Exploring Apple Intelligence: Integrating AI Tools into Your Swift Applications

As technology evolves, Apple continues to expand its work in Artificial Intelligence (AI). Its latest release is Apple Intelligence, introduced with iOS 18. Together with Apple's machine learning frameworks, it gives developers new ways to add intelligent, personalized features to their apps. In this post, we will explore how to put these tools to work in your Swift projects.

What is Apple Intelligence?

Apple Intelligence is Apple's personal intelligence system, built on generative models that run on device and, for more complex requests, on Apple's Private Cloud Compute servers. Introduced with iOS 18, it works alongside the machine learning (ML) frameworks Apple already offers developers, bringing new features and capabilities to apps.

Key AI and machine learning capabilities available to developers in iOS 18 include:

- Core ML: Core ML runs machine learning models on device, using the CPU, GPU, and Neural Engine for performance. Models marked as updatable can also be fine-tuned directly on the user's device with `MLUpdateTask`, so an app can adapt a model to its user over time without a cloud connection, making it smarter and more responsive.

- Vision and AR Enhancements: iOS 18 continues to deepen the integration between AI and Augmented Reality (AR). Using the Vision, ARKit, and RealityKit frameworks, developers can build visual experiences such as object tracking, hand-pose and gesture recognition, and real-time contextual interactions, improving the quality and personalization of AR experiences.

- Natural Language Framework: The Natural Language framework performs fast, on-device text analysis, including language identification, tokenization, named entity recognition, and sentiment scoring. Custom text classifiers, for example for intent detection, can be trained with Create ML and run through the same framework. Together these help apps understand the context and tone behind user messages; speech recognition and real-time transcription are handled separately by the Speech framework.

- Dynamic Personalization with On-Device Learning: On-device learning lets apps personalize their features based on user behavior and preferences over time. This improves privacy, since personal data does not need to be sent to external servers and stays on the user's device.

- Siri Enhanced with Contextual Intelligence: With Apple Intelligence, Siri gains improved context awareness, such as keeping track of context from one request to the next. Developers expose their app's actions through the App Intents framework, which lets Siri and Shortcuts offer more natural, personalized voice commands and suggest shortcuts based on user interactions and usage patterns.

- Advanced Anomaly Detection: Apps that monitor large volumes of data, such as health, security, and finance apps, can use on-device machine learning models to detect unusual or unexpected patterns and trigger automatic alerts.

- Emotion and Sentiment Recognition in Images: Vision provides building blocks such as face detection and facial landmarks. Combined with a custom Core ML model, they let developers add emotion recognition to images and videos, opening up possibilities for apps that analyze facial expressions, such as wellness or entertainment apps.

- Privacy and Security Powered by AI: Apple continues its commitment to privacy by running AI models directly on the device whenever possible, so sensitive data, such as text or image analyses, can stay on the device. When Apple Intelligence needs a larger server-based model, it uses Private Cloud Compute, which Apple designed so that user data is not stored or made accessible to Apple.
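To make on-device learning more concrete, here is a minimal sketch of fine-tuning an updatable Core ML model with `MLUpdateTask`. It assumes a compiled (`.mlmodelc`), updatable model whose training inputs are named `input` (an `MLMultiArray`) and `label` (a `String`); those feature names and the two helper functions are hypothetical, so match them to your own model's specification.

```swift
import CoreML

/// Packs labeled examples into a batch for on-device training.
/// The feature names "input" and "label" are hypothetical: use the
/// training input names declared by your updatable model.
func makeTrainingData(from examples: [(input: MLMultiArray, label: String)]) throws -> MLArrayBatchProvider {
    let providers: [MLFeatureProvider] = try examples.map { example in
        try MLDictionaryFeatureProvider(dictionary: [
            "input": MLFeatureValue(multiArray: example.input),
            "label": MLFeatureValue(string: example.label),
        ])
    }
    return MLArrayBatchProvider(array: providers)
}

/// Fine-tunes the updatable compiled model at `modelURL` on device and
/// writes the personalized model to `outputURL`. Training data never leaves the device.
func personalizeModel(at modelURL: URL,
                      with trainingData: MLBatchProvider,
                      savingTo outputURL: URL,
                      completion: @escaping (Error?) -> Void) throws {
    let task = try MLUpdateTask(forModelAt: modelURL,
                                trainingData: trainingData,
                                configuration: MLModelConfiguration(),
                                completionHandler: { context in
        // Report a training failure, if any.
        if let error = context.task.error {
            completion(error)
            return
        }
        do {
            // Persist the updated model so future launches can load it.
            try context.model.write(to: outputURL)
            completion(nil)
        } catch {
            completion(error)
        }
    })
    task.resume() // training runs asynchronously
}
```

In practice, the examples would come from the user's own interactions, and the app would load the model from `outputURL` on later launches instead of the bundled original.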

How to Integrate Apple Intelligence in Swift Apps

If you’re developing in Swift, integrating Apple Intelligence can be a relatively straightforward process thanks to frameworks like Core ML. Below, we'll walk through how you can start using AI in your app.

1. Incorporating Pre-Trained Models (Core ML)

Core ML is the primary framework for running machine learning models in Apple apps. With it, you can use pre-trained models or your own, for example models trained with Create ML or converted from other frameworks with Core ML Tools.

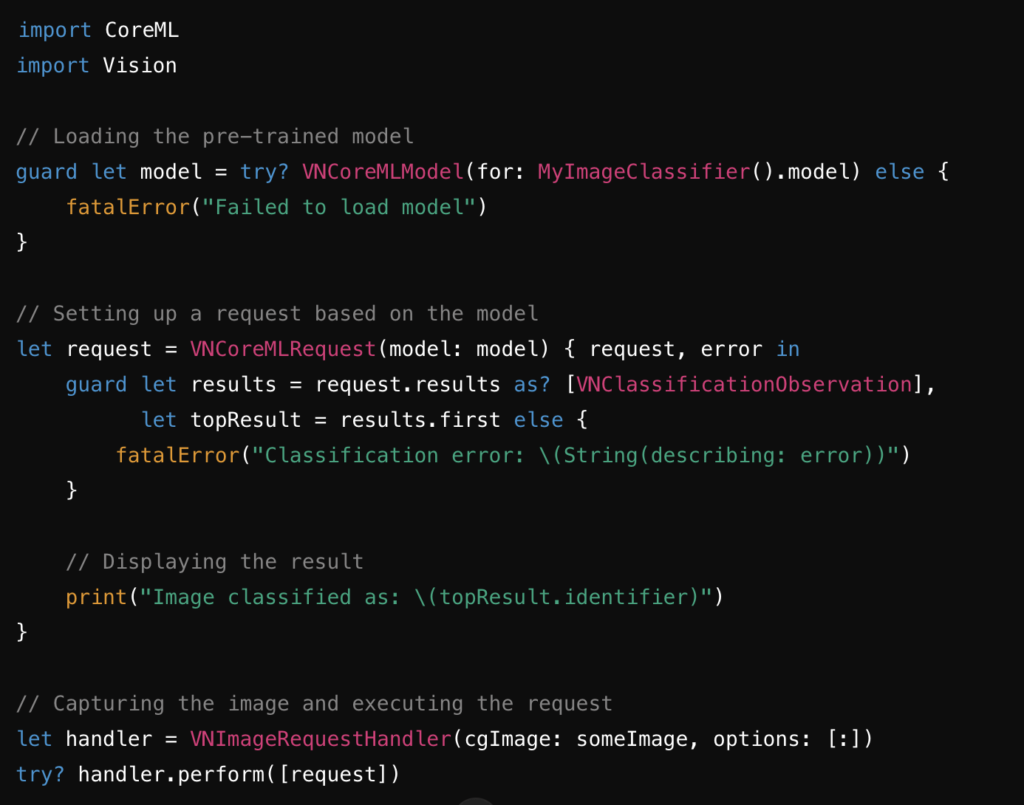

Here’s an example of using an image classification model in Swift:
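A minimal sketch of that flow, assuming your app already bundles a compiled image classifier (`.mlmodelc`); the model file and the helper names `loadClassifier` and `classify` are hypothetical placeholders. Vision scales the image to the model's input size, runs the model, and returns labeled observations.

```swift
import CoreGraphics
import CoreML
import Vision

/// Loads a compiled Core ML image classifier and wraps it for use with Vision.
func loadClassifier(at modelURL: URL) throws -> VNCoreMLModel {
    let mlModel = try MLModel(contentsOf: modelURL)
    return try VNCoreMLModel(for: mlModel)
}

/// Classifies a single image and returns the top `limit` labels by confidence.
func classify(_ image: CGImage,
              with model: VNCoreMLModel,
              limit: Int = 3) throws -> [(label: String, confidence: Float)] {
    let request = VNCoreMLRequest(model: model)
    // Let Vision crop and scale the image to the model's expected input size.
    request.imageCropAndScaleOption = .centerCrop

    // perform(_:) is synchronous; call it off the main thread in a real app.
    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    // Classifier models produce VNClassificationObservation results.
    let observations = (request.results as? [VNClassificationObservation]) ?? []
    return observations
        .sorted { $0.confidence > $1.confidence }
        .prefix(limit)
        .map { (label: $0.identifier, confidence: $0.confidence) }
}
```

Loading the `VNCoreMLModel` once and reusing it across requests avoids reloading the model for every image, which matters when classifying a stream of camera frames.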

This example demonstrates how to load a pre-trained image classification model and use it to make real-time predictions, integrating with the Vision framework for image analysis.

2. Text Analysis with the Natural Language Framework

The Natural Language framework offers efficient text processing capabilities. You can, for instance, analyze sentiments, identify named entities, or classify the language of the text.

Here’s an example of sentiment analysis in Swift:
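A minimal sketch using `NLTagger` with the `.sentimentScore` scheme, which reports a score from -1.0 (negative) to 1.0 (positive) as the raw value of a tag. The `Sentiment` enum and the 0.1 threshold are illustrative assumptions, not part of the framework.

```swift
import NaturalLanguage

/// Returns the sentiment score of `text` in -1.0...1.0, or nil if none is available.
func sentimentScore(for text: String) -> Double? {
    guard !text.isEmpty else { return nil }
    let tagger = NLTagger(tagSchemes: [.sentimentScore])
    tagger.string = text
    // The score is attached to the paragraph containing the given index.
    let (tag, _) = tagger.tag(at: text.startIndex, unit: .paragraph, scheme: .sentimentScore)
    return tag.flatMap { Double($0.rawValue) }
}

/// Illustrative buckets an app might react to (hypothetical threshold).
enum Sentiment { case positive, negative, neutral }

func classifySentiment(of text: String, threshold: Double = 0.1) -> Sentiment {
    guard let score = sentimentScore(for: text) else { return .neutral }
    if score > threshold { return .positive }
    if score < -threshold { return .negative }
    return .neutral
}
```

An app could, for example, show a follow-up prompt when `classifySentiment(of:)` returns `.negative` for a piece of user feedback.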

Here, the Natural Language framework is used to classify the sentiment of the provided text. Depending on the content, the app can dynamically react, providing feedback to the user.
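The other tasks mentioned for this framework, identifying named entities and the language of a text, follow the same pattern. A minimal sketch; the helper names `dominantLanguageCode` and `namedEntities` are hypothetical.

```swift
import NaturalLanguage

/// Returns the raw language code of the dominant language (for example "en").
func dominantLanguageCode(of text: String) -> String? {
    NLLanguageRecognizer.dominantLanguage(for: text)?.rawValue
}

/// Extracts people, places, and organizations mentioned in `text`.
func namedEntities(in text: String) -> [(name: String, type: NLTag)] {
    let tagger = NLTagger(tagSchemes: [.nameType])
    tagger.string = text
    // Skip punctuation and whitespace, and merge multi-word names into one token.
    let options: NLTagger.Options = [.omitPunctuation, .omitWhitespace, .joinNames]
    let wanted: Set<NLTag> = [.personalName, .placeName, .organizationName]

    var entities: [(name: String, type: NLTag)] = []
    tagger.enumerateTags(in: text.startIndex..<text.endIndex,
                         unit: .word,
                         scheme: .nameType,
                         options: options) { tag, range in
        if let tag, wanted.contains(tag) {
            entities.append((name: String(text[range]), type: tag))
        }
        return true // continue enumerating
    }
    return entities
}
```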

3. Using Siri and Smart Shortcuts

Apple Intelligence is also deeply integrated with Siri, allowing your apps to offer personalized voice commands and smart shortcuts. Using the App Intents framework in Swift, you can define your app's actions and shortcuts so that users can run them with voice commands through Siri or from the Shortcuts app.
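A minimal sketch with the App Intents framework (the `AppShortcut` initializer with `shortTitle` and `systemImageName` requires iOS 17 or later). `OpenFavoritesIntent`, `FavoritesShortcuts`, the phrase, and the Favorites screen are hypothetical; `perform()` is where your app would navigate or do its work.

```swift
import AppIntents

/// A hypothetical action that opens the app's Favorites screen.
struct OpenFavoritesIntent: AppIntent {
    static let title: LocalizedStringResource = "Open Favorites"
    // Bring the app to the foreground when the intent runs.
    static let openAppWhenRun: Bool = true

    @MainActor
    func perform() async throws -> some IntentResult {
        // App-specific navigation to the Favorites screen would go here.
        return .result()
    }
}

/// Registers spoken phrases so Siri and Shortcuts can run the intent with no user setup.
struct FavoritesShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: OpenFavoritesIntent(),
            // Each phrase must include the app name placeholder.
            phrases: ["Open my favorites in \(.applicationName)"],
            shortTitle: "Favorites",
            systemImageName: "star"
        )
    }
}
```

Because the shortcut is declared in code, it is available as soon as the app is installed, without the user recording a phrase.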

Conclusion

Apple Intelligence is a powerful tool for any developer looking to implement advanced AI functionalities into their apps. By developing in Swift, you can take full advantage of this platform’s capabilities, from image analysis to text comprehension, creating smarter, more personalized, and responsive experiences.

Now is the perfect time to explore what Apple Intelligence can do for you and your users! ✨🚀

Learn more.

https://www.apple.com/apple-intelligence/

https://developer.apple.com/apple-intelligence/